ETL Pipeline for Vendor Data Synchronization

Large-scale data pipeline processing 500k+ vendor records

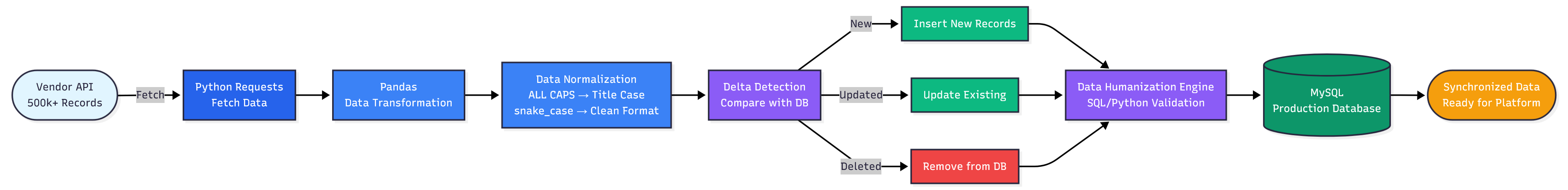

ETL Pipeline for Vendor Data Synchronization (500,000+ Records)

Overview

A large-scale ETL pipeline system designed to automate the ingestion, normalization, and consolidation of vendor data. The system processes 500,000+ records, maintaining bidirectional synchronization with vendor datasets—handling additions, updates, and deletions to ensure the production database accurately reflects vendor changes. Replaces manual workflows with automated data synchronization and validation processes.

Problem

Vendor sent inconsistent, fragmented bulk datasets requiring manual cleaning. The vendor's data changed frequently—records were added, updated, or deleted—but there was no automated way to keep the production database synchronized. No automation existed, leading to:

- Multiple days of manual processing time per dataset

- Stale data in production database that didn't reflect vendor changes

- Duplicate and inconsistent entries in the production database

- Unreliable downstream reporting systems

- High error rates in data processing workflows

Solution

Designed a two-part ingestion system to automate the entire data pipeline:

Python ETL Pipeline

- Fetches large datasets (~500,000 rows) via external API

- Uses Pandas for efficient data manipulation and transformation

- Performs bidirectional data consolidation: handles additions, updates, and deletions from vendor

- Compares vendor dataset with production database to identify changes

- Updates existing records when vendor data changes

- Removes records from production database when deleted by vendor

- Consolidates and normalizes fields across different data formats

- Deduplicates and checks for existing records

- Performs safe upserts and deletions into production MySQL database

- Ensures production database accurately reflects vendor's current state

- Adds comprehensive logging and retry logic for reliability

In-Database Cleaning Engine

- SQL + Python layer for data validation and sanitization

- Fixes inconsistent values and normalizes formats

- Enforces uniqueness constraints and business rules

- Reconciles discrepancies across vendor datasets

Architecture

The system follows a modular, production-ready architecture:

- ETL Layer: Python scripts with Pandas handle API interactions, data transformation, normalization, and batch processing

- Database: MySQL stores normalized vendor records with proper indexing

- Cleaning Engine: SQL stored procedures and Python validation scripts ensure data quality

- Automation: Cron jobs schedule regular data synchronization

- Logging: Comprehensive logging system tracks processing status and errors

Technical Breakdown

Key Technologies

- Python for ETL pipeline development and data processing

- Pandas for efficient data manipulation, transformation, and normalization of large datasets

- MySQL for production database storage

- SQL for in-database data cleaning and validation

- Requests library for API interactions

- Cron for scheduled automation

- Logging for monitoring and debugging

Challenges Solved

- Large-Scale Data Processing: Handled 500,000+ records efficiently with batch processing and incremental inserts

- Bidirectional Data Consolidation: Implemented logic to detect and synchronize additions, updates, and deletions from vendor, ensuring production database reflects vendor's current state

- Data Normalization: Consolidated fragmented datasets with varying formats into a unified schema

- Data Quality: Implemented validation rules to catch inconsistencies before production insertion

- Safe Incremental Updates: Designed upsert and deletion logic to prevent duplicates while updating existing records and removing deleted ones

- Error Handling: Built robust retry mechanisms and logging for production reliability

Results

- Time reduction: Manual processing time reduced from multiple days to under 5 minutes

- Data quality: Eliminated duplicate and inconsistent entries

- System stability: Stabilized downstream reporting systems

- Error reduction: Improved reporting accuracy and reduced errors by 80%+

- Data reliability: Improved data reliability across the entire platform

What I Learned

Building this ETL pipeline taught me the importance of designing for data quality and synchronization from the start. Implementing bidirectional consolidation—handling not just additions but also updates and deletions—was crucial for maintaining data accuracy. The challenge of detecting and applying vendor changes while preserving data integrity required careful design of comparison logic and safe update/deletion strategies. Implementing validation at multiple stages (API fetch, transformation, and database insertion) was essential for maintaining data integrity across all operations.

Next Steps

Future enhancements could include:

- Real-time data synchronization instead of batch processing

- Advanced data quality metrics and monitoring dashboards

- Support for additional vendor data formats

- Automated alerting for data quality issues

Key Features & Capabilities

Large-scale ETL pipeline processing 500k+ vendor records

Bidirectional data consolidation handling additions, updates, and deletions

Data normalization and field consolidation

Safe incremental upserts and deletions into production database

In-database data cleaning engine with validation

Comprehensive logging and retry logic

Automated scheduled synchronization maintaining database-vendor alignment